Project Manager/Author: Alex Chan | Raymond Gu | Timothy Liu | Nick Mecklenburg

Introduction

One of the largest markets for drones is tracking in action sports like racing and snowboarding. However, current quadcopters that use object tracking algorithms are restricted to a front-facing viewpoint, so a viewer only sees the back of the main subject in the video, which doesn’t exploit the excellent maneuverability of a drone. For example, imagine a snowboarder preparing for a ramp trick and switching the drone camera’s viewpoint so that it gets a side shot of the trick in midair. The subject of the video could control the video without the use of a physical transmitter.

Project Description

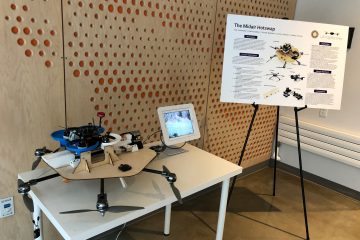

FlyWave was designed to provide autonomous control of a quadcopter using only arm gestures. Using machine learning, the drone is able to classify different arm gestures that the subject of the video presents to the camera. Each classified gesture corresponds to a different movement by the drone, whether it be a rotation to the side of the user, a land signal, or even a flip. The programmatic manipulation of the drone is streamlined by using a Parrot Bebop 2, a commercial drone with a programmatic interface to the camera and drone movement controls.

System Design

Image Processing

Before feeding the image data into a machine learning model, the images need to be processed so that the model can more easily extract and detect features. In our case, these features are the arm gestures the person in the video stream makes. The first step we take is converting our image from RGB to grayscale in order to reduce the dimensionality of our model input. We then use Gaussian blurring and thresholding to further emphasize the features in our image.
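
The three steps above can be sketched in a few lines. This is a minimal NumPy-only illustration, not the project's actual pipeline: the kernel size, sigma, and fixed cutoff of 128 are assumed values, and the function names are hypothetical. OpenCV provides equivalent operations that would normally be used instead.

```python
import numpy as np


def to_grayscale(rgb):
    """Collapse an H x W x 3 RGB image to H x W using BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(np.float64) @ weights


def gaussian_kernel(size=5, sigma=1.0):
    """Return a normalized 1-D Gaussian kernel (size should be odd)."""
    offsets = np.arange(size) - size // 2
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(gray, size=5, sigma=1.0):
    """Blur by applying the 1-D kernel along rows, then along columns."""
    kernel = gaussian_kernel(size, sigma)
    # Repeat edge pixels so the output keeps the input's height and width.
    padded = np.pad(gray, size // 2, mode="edge")
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def threshold(gray, cutoff=128.0):
    """Binarize: pixels above the cutoff become 255, the rest become 0."""
    return np.where(gray > cutoff, 255, 0).astype(np.uint8)


def preprocess(rgb, cutoff=128.0):
    """Grayscale, blur, then threshold (cutoff is an assumed value)."""
    return threshold(gaussian_blur(to_grayscale(rgb)), cutoff)
```

Blurring before thresholding suppresses pixel-level noise that would otherwise produce speckles in the binary image. A fixed cutoff is the simplest choice; outdoor footage with changing light would likely need an adaptive one.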

Parrot Bebop

The Parrot Bebop provides a programmatic interface that streamlined the process of automating flight and vision tasks. Using the PyParrot open source library, we could connect to the Bebop drone via WiFi, stream and process images through its camera feed, and send it commands to control the drone’s movement.
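
One way to connect classifier output to drone movement is a small dispatch table. The sketch below is an assumption about how such glue code could look, not the project's code: the gesture labels and the numeric values are hypothetical. The method names `fly_direct` and `safe_land` follow PyParrot's `Bebop` class as we understand it, and the function accepts any object that exposes them.

```python
# Hypothetical gesture labels mapped to keyword arguments for a
# fly_direct-style call. Values are percentages in the range -100..100.
GESTURE_COMMANDS = {
    "arc_left": {"roll": -20, "pitch": 0, "yaw": 30, "vertical_movement": 0},
    "arc_right": {"roll": 20, "pitch": 0, "yaw": -30, "vertical_movement": 0},
}
LAND_GESTURE = "land"  # hypothetical label for the land signal


def dispatch(drone, gesture, duration=1.0):
    """Send the command for a gesture; return False for unknown gestures."""
    if gesture == LAND_GESTURE:
        drone.safe_land(5)  # 5 is an assumed timeout in seconds
        return True
    command = GESTURE_COMMANDS.get(gesture)
    if command is None:
        return False  # ignore unrecognized labels rather than guess
    drone.fly_direct(duration=duration, **command)
    return True
```

Keeping the mapping in data rather than in branching code makes it easy to add or retune gestures, and returning `False` for unknown labels means a misclassification never triggers an unintended maneuver.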

Deep Learning

The final step of the challenge was developing a model to accurately classify arm gestures. While researching how to approach the task, our team determined that deep learning would be the best use of our available resources. We compared various architectures to determine the best-suited model for the task.

Cascade Object Classifier

Cascade object classifiers are based around the concatenation of several different classifiers. What this means is that we arrange multiple classifiers in series and use all the information from each classifier’s output as additional info in the next classifier. Cascade classifiers work well in determining common features between a set of images.
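
The idea of passing each classifier's output forward can be sketched as follows. This is an illustrative toy under the description above, with hypothetical names; each stage is just a callable, and real cascade implementations such as Haar cascades differ in detail.

```python
def run_cascade(stages, features):
    """Run stages in order, appending each output to the next stage's input."""
    augmented = list(features)
    output = None
    for stage in stages:
        output = stage(augmented)
        # Later stages see the original features plus all earlier outputs.
        augmented.append(output)
    return output
```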

Convolutional Neural Networks

A convolutional neural network takes advantage of the spatial information in images, learning higher-level abstractions in deeper layers of the network. This model was useful for our particular classification problem because it was able to learn the differences between the arm gestures from the labeled training data.
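
To make "spatial information" concrete, the sketch below implements the three building blocks of a typical convolutional layer in plain NumPy. It is a teaching illustration with hypothetical function names, not the network used in this project; a real model would learn its kernels and would be built with a deep learning framework.

```python
import numpy as np


def conv2d(image, kernel):
    """Valid-mode 2-D cross-correlation, the core operation of a conv layer."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output pixel depends only on a small local patch.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Keep positive responses and zero out the rest."""
    return np.maximum(x, 0.0)


def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))
```

Applied to an image that is dark on the left and bright on the right, a kernel such as `[[-1, 1]]` responds only along the boundary, which is the kind of local edge feature that deeper layers combine into shapes like an outstretched arm.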

Capsule Networks

Capsule networks are similar to convolutional neural networks in that they take advantage of the spatial data in images. However, one major improvement they are designed to offer over a CNN is robustness to changes in pose: an image that has undergone transformations such as rotation or translation should still be recognized by a capsule network.

Challenges

- Preprocessing: We tried a variety of preprocessing methods to isolate the different gestures from the person’s surroundings. However, the preprocessing would often remove parts of the gesture. There were also instances where the background was not fully removed.

- Gesture Detection: We initially tried to use a Haar Cascade Classifier to detect different hand features in the image frame, but the OpenCV implementation had some bugs in the source code which prevented us from training the classifier. We tried to fix the source code manually and recompile OpenCV, but the compilation repeatedly failed. We then tried using MATLAB’s implementation, but we realized that the MATLAB classifier was not compatible with Python. It also required us to manually select the region of interest in over a thousand distinct images. We eventually ended up modifying an existing implementation in TensorFlow.

- Training: Training the classifier is a very expensive process, and since we did not have access to a GPU, this process was very slow.

- Overfitting: We realized that after training the classifier, we were getting 100% accuracy on classification, which seemed to imply that our model was overfitting the data we gave to it, though it is possible that our preprocessing did most of the heavy lifting in detecting relevant features in the image. We could have benefitted from more recording sessions.
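
One common way to check whether a perfect score reflects overfitting is to evaluate on recordings the model never saw during training. The sketch below assumes each sample is tagged with the recording session it came from; the tuple layout and function names are hypothetical and not part of the project.

```python
def split_by_session(samples, held_out_sessions):
    """Split (session_id, features, label) tuples so no session spans both sets."""
    held_out = set(held_out_sessions)
    train = [s for s in samples if s[0] not in held_out]
    test = [s for s in samples if s[0] in held_out]
    return train, test


def accuracy(predict, samples):
    """Fraction of samples whose predicted label matches the true label."""
    if not samples:
        raise ValueError("cannot score an empty sample list")
    correct = sum(1 for _, features, label in samples
                  if predict(features) == label)
    return correct / len(samples)
```

If accuracy on the held-out session is much lower than on the training sessions, the model has probably memorized details of the recording environment rather than the gestures themselves.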

Results

FlyWave required us to test many different algorithms for image classification as well as for processing our images to make it easier for our classifier to learn features of the image. While we weren’t able to remove the background completely, our neural network was still successful in accurately distinguishing the different gestures. The gestures that we trained on were holding an arm angled upward and pointing outward, for each side. Each of these mapped to a different action, such as arcing to the left or right, pitching up or down, and landing. While tests in our environment were successful, our model likely learned features in the image that we did not intend, such as trees and grass in the background, potentially causing the model to overfit to this particular environment. However, the FlyWave drone was overall successful in its image classification and controls.
